How to Upload Large Files to Amazon S3 with AWS CLI

Amazon S3 is a widely used public cloud storage system. S3 allows an object (file) to be up to 5TB, which is enough for most applications. The AWS Management Console provides a web-based interface for uploading and managing files in S3 buckets. However, uploading a large file that is hundreds of GB through the web interface is not easy; in my experience, it fails frequently. Various third-party commercial tools claim to help people upload large files to Amazon S3, and Amazon also provides a Multipart Upload API, on which most of these tools are based.
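The Multipart Upload API splits an object into parts of 5 MiB to 5 GiB (the last part may be smaller), with at most 10,000 parts per upload. The hypothetical shell helpers below sketch what those limits mean for a very large file. They compute the smallest whole-MiB part size that stays within the limits, and the resulting number of parts. They only do the arithmetic. The aws CLI picks its own part size, so its part counts can differ.

```shell
# Hypothetical helpers illustrating S3 multipart limits (not part of aws-cli).
# s3_part_size_mib BYTES: smallest whole-MiB part size that fits the file
# into at most 10,000 parts, but never below the 5 MiB minimum part size.
s3_part_size_mib() {
  local size_bytes=$1
  local mib=$((1024 * 1024))
  local max_object=$((5 * 1024 * 1024 * 1024 * 1024))   # 5 TiB object limit
  local max_parts=10000 min_part_mib=5
  if (( size_bytes > max_object )); then
    echo "file is larger than the 5 TiB S3 object limit" >&2
    return 1
  fi
  # Round up twice: bytes per part, then bytes -> whole MiB.
  local per_part=$(( (size_bytes + max_parts - 1) / max_parts ))
  local part_mib=$(( (per_part + mib - 1) / mib ))
  if (( part_mib < min_part_mib )); then
    part_mib=$min_part_mib
  fi
  echo "$part_mib"
}

# s3_part_count BYTES PART_MIB: number of parts at the given part size.
s3_part_count() {
  local size_bytes=$1
  local part_bytes=$(( $2 * 1024 * 1024 ))
  echo $(( (size_bytes + part_bytes - 1) / part_bytes ))
}
```

For example, `s3_part_size_mib 161061273600` (150 GiB) prints 16, and `s3_part_count 161061273600 16` prints 9600.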

While these tools are helpful, they are not free, and AWS already provides a pretty good tool for uploading large files to S3: the open-source aws s3 CLI tool from Amazon. In my tests, the aws s3 command line tool achieved more than 7MB/s upload speed on a shared 100Mbps network, which should be good enough for many situations and network environments. In this post, I will give a tutorial on uploading large files to Amazon S3 with the aws command line tool.
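Here is a back-of-the-envelope estimate that puts the 7MB/s figure in perspective for the 150GB file used below. It assumes decimal units (1 GB = 1000 MB, 1 MB/s = 8 Mbit/s) and that the speed stays constant for the whole transfer:

```latex
t \approx \frac{150\ \mathrm{GB}}{7\ \mathrm{MB/s}}
  = \frac{150\,000\ \mathrm{MB}}{7\ \mathrm{MB/s}}
  \approx 21\,400\ \mathrm{s} \approx 6\ \mathrm{h},
\qquad
\frac{7\ \mathrm{MB/s}}{100\ \mathrm{Mbit/s} \,/\, 8}
  = \frac{7}{12.5} = 0.56
```

At that speed, a 150GB upload takes roughly six hours and uses a bit over half of the nominal 100Mbps link.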

Install aws CLI tool

Assume that you already have a Python environment set up on your computer. You can install the aws tool using pip or the bundled installer. To use the bundled installer:

$ curl "https://s3.amazonaws.com/aws-cli/awscli-bundle.zip" -o "awscli-bundle.zip"

$ unzip awscli-bundle.zip

$ sudo ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws
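If you install the CLI from a provisioning script rather than by hand, a check like the following can catch a failed install early. It is a sketch. The function name is hypothetical, and it relies only on the shell's `command -v` and the CLI's `aws --version`.

```shell
# Hypothetical helper: fail loudly if the aws binary is not on PATH
# (the bundled installer above links it as /usr/local/bin/aws).
check_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "aws not found on PATH; is /usr/local/bin in PATH?" >&2
    return 1
  fi
  # Print the installed version, e.g. for a setup log.
  aws --version
}
```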

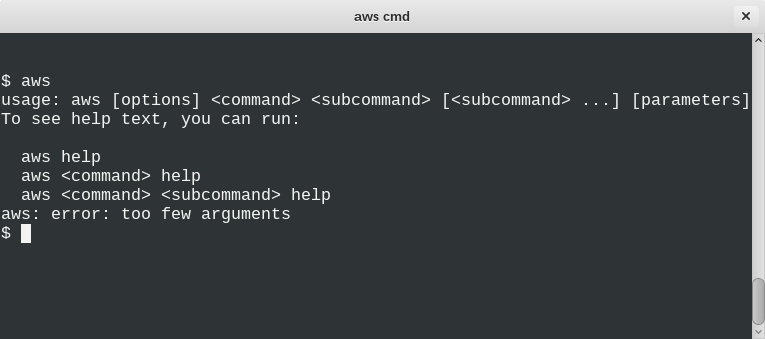

After installation, try running aws. If you see output like the following, the installation succeeded.

$ aws

usage: aws [options] <command> <subcommand> [<subcommand> ...] [parameters]

To see help text, you can run:

aws help

aws <command> help

aws <command> <subcommand> help

aws: error: too few arguments

Configure aws tool access

The quickest way to configure the AWS CLI is to run the aws configure command:

$ aws configure

AWS Access Key ID: foo

AWS Secret Access Key: bar

Default region name [us-west-2]: us-west-2

Default output format [None]: json

Here, your AWS Access Key ID and AWS Secret Access Key can be found in Your Security Credentials on the AWS Console.
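`aws configure` stores these answers in two plain-text files. On machines where an interactive prompt is inconvenient, you can write the same files yourself. The sketch below uses a hypothetical function name, writes into a directory of your choosing, and also sets example values for the CLI's S3 transfer settings (`max_concurrent_requests`, `multipart_chunksize`), which `aws s3 cp` reads for multipart uploads. These are example values, not recommendations. Passing a secret key as an argument can expose it in shell history and process listings.

```shell
# Hypothetical helper: write what `aws configure` would, without prompts.
# Usage: write_aws_config DIR ACCESS_KEY_ID SECRET_ACCESS_KEY REGION
write_aws_config() {
  local dir=$1 key_id=$2 secret=$3 region=$4
  mkdir -p "$dir" || return 1
  # Credentials file: the keys `aws configure` puts in ~/.aws/credentials.
  cat > "$dir/credentials" <<EOF
[default]
aws_access_key_id = $key_id
aws_secret_access_key = $secret
EOF
  chmod 600 "$dir/credentials"
  # Config file: region, output format, and example S3 transfer settings.
  cat > "$dir/config" <<EOF
[default]
region = $region
output = json
s3 =
  max_concurrent_requests = 10
  multipart_chunksize = 64MB
EOF
}
```

Point the CLI at the files with the `AWS_CONFIG_FILE` and `AWS_SHARED_CREDENTIALS_FILE` environment variables, or write them to `~/.aws/config` and `~/.aws/credentials`, the default locations. `aws configure set` edits the same files one key at a time, for example `aws configure set default.s3.multipart_chunksize 64MB`.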

Uploading large files

Now for the fun part. Assume we are uploading the large file ./150GB.data to s3://systut-data-test/store_dir/ (that is, the directory store_dir under the bucket systut-data-test), and that the bucket already exists on S3. S3 "directories" are just key prefixes, so store_dir/ does not need to be created first. The command is:

$ aws s3 cp ./150GB.data s3://systut-data-test/store_dir/

After it starts to upload the file, it prints a progress message like

Completed 1 part(s) with ... file(s) remaining

at the beginning, and a progress message like the following as it nears the end:

Completed 9896 of 9896 part(s) with 1 file(s) remaining

After it successfully uploads the file, it prints a message like

upload: ./150GB.data to s3://systut-data-test/store_dir/150GB.data

aws has more commands for operating on files in S3. I hope this tutorial helps you get started with it. Check the manual for more details.
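When you script large uploads, an independent check after `aws s3 cp` can be reassuring. The helper below is a sketch; its name and the `AWS_BIN` override are hypothetical. It runs the same cp command, then compares the object's `ContentLength`, fetched with `aws s3api head-object`, against the local file size. This only checks the size, not the content. For multipart uploads, the object's ETag is generally not the MD5 of the whole file, so it cannot be compared with md5sum directly.

```shell
# Hypothetical helper: upload with `aws s3 cp`, then compare sizes.
# AWS_BIN can point at another command (e.g. a stub when testing).
# Usage: upload_and_verify FILE BUCKET KEY
upload_and_verify() {
  local file=$1 bucket=$2 key=$3
  local aws_bin=${AWS_BIN:-aws}
  "$aws_bin" s3 cp "$file" "s3://$bucket/$key" || return 1
  local local_size remote_size
  # wc -c pads with spaces on some systems; strip them.
  local_size=$(wc -c < "$file" | tr -d ' ')
  remote_size=$("$aws_bin" s3api head-object --bucket "$bucket" --key "$key" \
    --query ContentLength --output text) || return 1
  if [ "$local_size" != "$remote_size" ]; then
    echo "size mismatch: local $local_size, remote $remote_size" >&2
    return 1
  fi
  echo "verified $key ($remote_size bytes)"
}
```

For the upload above, that would be `upload_and_verify ./150GB.data systut-data-test store_dir/150GB.data`.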

To upload a directory recursively, you can use `aws s3 sync`. For example, to upload the current directory to the directory my-dir in the bucket my-bucket:

$ aws s3 sync . s3://my-bucket/my-dir/
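Because sync can transfer many files at once, it can help to preview it first. `aws s3 sync` accepts `--dryrun`, which lists the operations without performing them. The hypothetical helper below does a dry run, asks for confirmation, and only then runs the real sync. Extra options, such as `--exclude '*.tmp'`, are passed through to both runs.

```shell
# Hypothetical helper: dry-run an `aws s3 sync`, then run it if confirmed.
# Usage: sync_with_preview LOCAL_DIR S3_URI [extra aws s3 sync options...]
sync_with_preview() {
  local src=$1 dst=$2
  shift 2
  local aws_bin=${AWS_BIN:-aws}
  # --dryrun prints what would be uploaded without uploading anything.
  "$aws_bin" s3 sync "$src" "$dst" --dryrun "$@" || return 1
  local answer
  read -r -p "Proceed with the sync above? [y/N] " answer
  if [ "$answer" != "y" ]; then
    echo "aborted" >&2
    return 1
  fi
  "$aws_bin" s3 sync "$src" "$dst" "$@"
}
```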

Hey Eric, is there a parameter available for the above command that would allow me to enforce TLS 1.2 encryption in-transit?

I am not aware of one. You may need to dig into the source code of aws-cli, which is available at https://github.com/aws/aws-cli, to investigate or to patch it to enforce TLS 1.2.

How do I sync between an SFTP location and an S3 bucket directly?

You may consider a solution like this:

1. Mount the SFTP location to a local directory with sshfs (see http://www.systutorials.com/1505/mounting-remote-folder-through-ssh/).

2. Use the tool in this post (aws s3 sync) to sync the local directory, where the SFTP location is mounted, with your S3 bucket.
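The two steps above can be sketched as one shell function. It assumes a Linux machine with sshfs and FUSE installed (unmounting uses `fusermount -u`) and key-based SSH login to the SFTP host. The host, mount point, and bucket are placeholders, and the `*_BIN` variables are hypothetical overrides for testing.

```shell
# Hypothetical sketch: mount an SFTP location with sshfs, sync it to S3,
# then unmount. Usage:
#   sftp_to_s3 user@sftp.example.com:/remote/dir /mnt/sftp s3://my-bucket/my-dir/
sftp_to_s3() {
  local remote=$1 mountpoint=$2 dst=$3
  local sshfs_bin=${SSHFS_BIN:-sshfs}
  local umount_bin=${UMOUNT_BIN:-fusermount}
  local aws_bin=${AWS_BIN:-aws}
  mkdir -p "$mountpoint" || return 1
  # Step 1: mount the remote directory locally.
  "$sshfs_bin" "$remote" "$mountpoint" || return 1
  # Step 2: sync the mount point to S3, remembering any failure.
  local status=0
  "$aws_bin" s3 sync "$mountpoint" "$dst" || status=$?
  # Always unmount, even if the sync failed.
  "$umount_bin" -u "$mountpoint"
  return "$status"
}
```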

What happens when a large file upload fails? This is not covered.

I’ve been getting segfaults using the plain cp command, and re-running it starts again from the beginning. On large files, this can mean days wasted.

Stumbled upon this while looking for solutions to upload large files.

Check this link: https://aws.amazon.com/premiumsupport/knowledge-center/s3-multipart-upload-cli/

If your cp process keeps dying, you may want to break the upload apart explicitly with the lower-level s3api command set.
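Following the s3api suggestion above, here is a sketch of a manual multipart upload that can be resumed after a crash. It is an illustration, not an official AWS script. The function name, work-directory layout, and 100 MiB default part size are my assumptions. It cuts the file into parts with split, which needs free disk space equal to the file size. It then calls `aws s3api create-multipart-upload`, `upload-part`, and `complete-multipart-upload`. Each part's ETag is saved as soon as that part succeeds, so re-running with the same work directory uploads only the missing parts.

```shell
# Hypothetical sketch: resumable multipart upload with `aws s3api`.
# Usage: multipart_upload FILE BUCKET KEY [PART_SIZE_BYTES] [WORK_DIR]
# WORK_DIR keeps the split parts, the UploadId and one ETag file per part.
multipart_upload() {
  local file=$1 bucket=$2 key=$3
  local part_size=${4:-104857600}   # 100 MiB; real uploads need >= 5 MiB parts
  local work=${5:-./multipart-work}
  local aws_bin=${AWS_BIN:-aws}
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  mkdir -p "$work" || return 1

  # S3 allows at most 10,000 parts per upload.
  local size parts
  size=$(wc -c < "$file" | tr -d ' ')
  parts=$(( (size + part_size - 1) / part_size ))
  if (( parts > 10000 )); then
    echo "too many parts ($parts); use a larger part size" >&2
    return 1
  fi

  # 1. Split the file once; 4-letter suffixes allow 26^4 pieces.
  if [ ! -e "$work/split.done" ]; then
    split -a 4 -b "$part_size" "$file" "$work/part-" || return 1
    touch "$work/split.done"
  fi

  # 2. Start the multipart upload once and keep its UploadId.
  if [ ! -s "$work/upload-id" ]; then
    "$aws_bin" s3api create-multipart-upload --bucket "$bucket" --key "$key" \
      --query UploadId --output text > "$work/upload-id" || return 1
  fi
  local upload_id
  upload_id=$(cat "$work/upload-id")

  # 3. Upload every part without a saved ETag (part numbers start at 1).
  local n=0 part etag
  for part in "$work"/part-*; do
    [ -e "$part" ] || break
    n=$((n + 1))
    if [ -s "$work/etag-$n" ]; then
      continue
    fi
    etag=$("$aws_bin" s3api upload-part --bucket "$bucket" --key "$key" \
      --part-number "$n" --body "$part" --upload-id "$upload_id" \
      --query ETag --output text) || return 1
    # Text output keeps the double quotes around the ETag; drop them.
    etag=${etag//\"/}
    printf '%s\n' "$etag" > "$work/etag-$n"
  done

  # 4. List the parts in the JSON shape complete-multipart-upload expects.
  local i
  {
    printf '{"Parts": ['
    for ((i = 1; i <= n; i++)); do
      if (( i > 1 )); then printf ', '; fi
      printf '{"ETag": "%s", "PartNumber": %d}' "$(cat "$work/etag-$i")" "$i"
    done
    printf ']}\n'
  } > "$work/parts.json"

  "$aws_bin" s3api complete-multipart-upload --bucket "$bucket" --key "$key" \
    --upload-id "$upload_id" --multipart-upload "file://$work/parts.json"
}
```

For the file in this post, that would be `multipart_upload ./150GB.data systut-data-test store_dir/150GB.data`. Parts of an unfinished upload stay in the bucket, and are billed, until the upload is completed or aborted. To give up on an upload, discard it with `aws s3api abort-multipart-upload --bucket systut-data-test --key store_dir/150GB.data --upload-id "$(cat ./multipart-work/upload-id)"`.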

How do I upload an image file from my local folder to an S3 bucket via the command prompt?

Please help by providing the CLI commands.
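A single image is uploaded the same way as the large file in this post, with `aws s3 cp`. The sketch below, with a hypothetical function name and bucket, also sets the object's Content-Type explicitly with `--content-type` for a few common image extensions. The CLI normally guesses the type from the file extension on its own, so this is optional.

```shell
# Hypothetical helper: copy one local image to S3 with an explicit type.
# Usage: upload_image ./photo.jpg s3://my-bucket/images/
upload_image() {
  local file=$1 dst=$2
  local aws_bin=${AWS_BIN:-aws}
  local type
  # Map the file extension to a MIME type.
  case "${file##*.}" in
    jpg|jpeg|JPG|JPEG) type=image/jpeg ;;
    png|PNG) type=image/png ;;
    gif|GIF) type=image/gif ;;
    *) echo "unrecognized image extension: $file" >&2; return 1 ;;
  esac
  "$aws_bin" s3 cp "$file" "$dst" --content-type "$type"
}
```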